KV Cache in nanoGPT

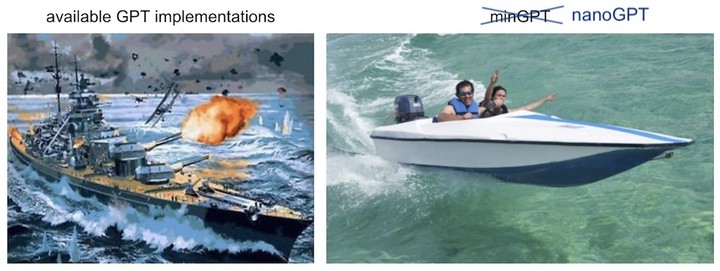

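A modification of nanoGPT that uses a KV cache during inference. Caching the keys and values of tokens that have already been processed speeds up generation, because attention no longer has to be recomputed over the entire sequence every time a new token is generated. At each step only the newest token's query, key, and value are computed, and that query attends over the cached keys and values.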
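To make the idea concrete, here is a minimal sketch of a KV cache for a single attention head, written in plain Python so it runs without PyTorch. It is not the code from this repository: the names `KVCache`, `attend`, `last_output_no_cache`, and the random weights `Wq`, `Wk`, `Wv` are all hypothetical and exist only for illustration. The loop at the end checks that the cached path gives the same output for the newest token as recomputing keys and values for the whole sequence.

```python
import math
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def attend(q, keys, values):
    """Scaled dot-product attention for one query over all keys/values."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
    m = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) / total
            for i in range(dim)]


def last_output_no_cache(xs, Wq, Wk, Wv):
    """Baseline: recompute K and V for every token, then attend from the last one."""
    keys = [matvec(Wk, x) for x in xs]
    values = [matvec(Wv, x) for x in xs]
    return attend(matvec(Wq, xs[-1]), keys, values)


class KVCache:
    """Hypothetical cache: stores one key and one value per processed token."""

    def __init__(self):
        self.keys = []
        self.values = []

    def step(self, x, Wq, Wk, Wv):
        # Only the newest token is projected; older keys/values are reused.
        self.keys.append(matvec(Wk, x))
        self.values.append(matvec(Wv, x))
        return attend(matvec(Wq, x), self.keys, self.values)


random.seed(0)
d = 4  # toy embedding size (assumption for the example)


def rand_matrix(n):
    return [[random.gauss(0, 1) for _ in range(n)] for _ in range(n)]


Wq, Wk, Wv = rand_matrix(d), rand_matrix(d), rand_matrix(d)
xs = [[random.gauss(0, 1) for _ in range(d)] for _ in range(6)]

cache = KVCache()
for t, x in enumerate(xs):
    cached = cache.step(x, Wq, Wk, Wv)
    full = last_output_no_cache(xs[: t + 1], Wq, Wk, Wv)
    # Both paths must agree on the newest token's attention output.
    assert all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(cached, full))
```

In this sketch the baseline projects all `t` tokens again at step `t`, while the cached path projects only one token per step. No causal mask is needed here because only the last position is evaluated, and it is allowed to attend to every earlier token.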