- mlsys

- transformer

- paper-summaries

- MLSys

- LLMs

- PPML

-

Paper Summary #14 - Physics of Language Models: Part 3.1, Knowledge Storage and Extraction

My notes from the Physics of Language Models series of papers.

-

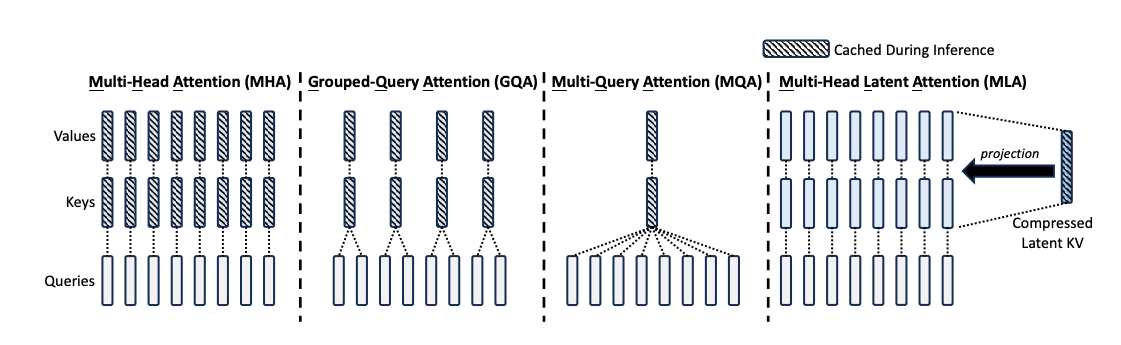

Understanding Multi-Head Latent Attention (MLA)

A mathematical and code deep-dive into one of the key innovations from DeepSeek: Multi-Head Latent Attention (MLA).

-

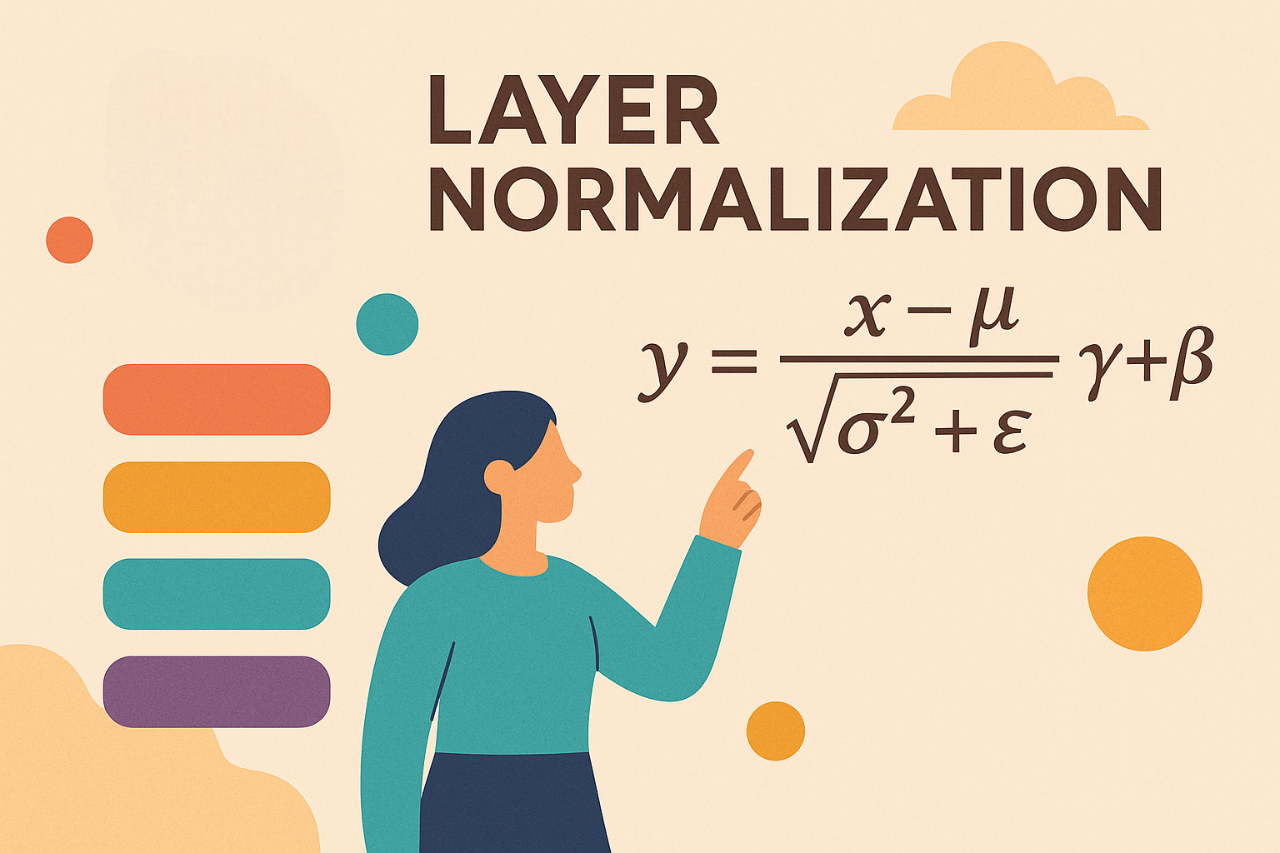

Deriving the Gradient for the Backward Pass of Layer Normalization

Understanding the math behind Layer Normalization and deriving the gradients for the backward pass.

-

Notes from GTC'25: CUDA Techniques to Maximize Compute and Instruction Throughput

My notes from the talk on maximizing compute and instruction throughput at NVIDIA GTC 2025.

-

Notes from GTC'25: CUDA Techniques to Maximize Memory Bandwidth and Hide Latency - Part 2

Second part of my notes from the talk on maximizing memory bandwidth at NVIDIA GTC 2025.

-

Notes from GTC'25: CUDA Techniques to Maximize Memory Bandwidth and Hide Latency - Part 1

First part of my notes from the talk on maximizing memory bandwidth at NVIDIA GTC 2025.

-

Faster Cross-Encoder Inference: Unleashing torch.compile for speed

A quick writeup on accelerating a Jina Cross-Encoder using torch.compile.

-

Paper Summary #13 - Physics of Language Models: Part 2.1, Grade-School Math and the Hidden Reasoning Process

My notes from the Physics of Language Models series of papers.

-

Paper Summary #12 - Image Recaptioning in DALL-E 3

The image recaptioning technique used in DALL-E 3 was extended to videos in Sora.

-

Paper Summary #11 - Sora

OpenAI announced a ground-breaking text-to-video diffusion model capable of generating high-definition videos up to 60 seconds long.